Download Professional Data Engineer on Google Cloud Platform.Professional-Data-Engineer.ExamTopics.2025-05-07.318q.vcex

| Field | Value |
| --- | --- |
| Vendor | |
| Exam Code | Professional-Data-Engineer |
| Exam Name | Professional Data Engineer on Google Cloud Platform |
| Date | May 07, 2025 |
| File Size | 2 MB |

Demo Questions

Question 1

Your company built a TensorFlow neural network model with a large number of neurons and layers. The model fits the training data well. However, when tested against new data, it performs poorly. What method can you employ to address this?

- A. Threading

- B. Serialization

- C. Dropout Methods

- D. Dimensionality Reduction

Correct answer: C
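
Dropout, the technique named in answer C, can be illustrated without TensorFlow. The following is a minimal pure-Python sketch of inverted dropout applied to one layer's activations. The function name `apply_dropout`, the `rate` parameter, and the sample values are assumptions made for illustration and are not part of the exam question.

```python
import random

def apply_dropout(activations, rate, rng):
    """Inverted dropout: zero each activation with probability `rate` and
    scale the survivors by 1 / (1 - rate) so the expected value is unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the run is repeatable
layer_output = [0.5, 1.0, 1.5, 2.0]  # hypothetical activations
dropped = apply_dropout(layer_output, rate=0.5, rng=rng)
print(dropped)
```

Dropout is applied only during training. Randomly silencing units keeps a large network from relying on any single neuron, which is why it is a common response to a model that fits training data but generalizes poorly.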

Question 2

Your company is in a highly regulated industry. One of your requirements is to ensure individual users have access only to the minimum amount of information required to do their jobs. You want to enforce this requirement with Google BigQuery. Which three approaches can you take? (Choose three.)

- A. Disable writes to certain tables.

- B. Restrict access to tables by role.

- C. Ensure that the data is encrypted at all times.

- D. Restrict BigQuery API access to approved users.

- E. Segregate data across multiple tables or databases.

- F. Use Google Stackdriver Audit Logging to determine policy violations.

Correct answer: BDE

Question 3

You have a requirement to insert minute-resolution data from 50,000 sensors into a BigQuery table. You expect significant growth in data volume and need the data to be available within 1 minute of ingestion for real-time analysis of aggregated trends. What should you do?

- A. Use bq load to load a batch of sensor data every 60 seconds.

- B. Use a Cloud Dataflow pipeline to stream data into the BigQuery table.

- C. Use the INSERT statement to insert a batch of data every 60 seconds.

- D. Use the MERGE statement to apply updates in batch every 60 seconds.

Correct answer: B
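
For a sense of the ingestion rate behind this question, the arithmetic can be worked out as follows. This sketch assumes exactly one reading per sensor per minute, which is one interpretation of "minute-resolution"; the constant names are illustrative.

```python
SENSORS = 50_000           # from the question
READINGS_PER_MINUTE = 1    # assumption: one reading per sensor per minute

rows_per_minute = SENSORS * READINGS_PER_MINUTE
rows_per_second = rows_per_minute / 60
rows_per_day = rows_per_minute * 60 * 24

print(f"{rows_per_minute:,} rows/minute")
print(f"{rows_per_second:,.0f} rows/second on average")
print(f"{rows_per_day:,} rows/day")
```

Under that assumption the table receives 72 million rows per day before any growth, arriving continuously rather than in natural batches.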

Question 4

You need to copy millions of sensitive patient records from a relational database to BigQuery. The total size of the database is 10 TB. You need to design a solution that is secure and time-efficient. What should you do?

- A. Export the records from the database as an Avro file. Upload the file to GCS using gsutil, and then load the Avro file into BigQuery using the BigQuery web UI in the GCP Console.

- B. Export the records from the database as an Avro file. Copy the file onto a Transfer Appliance and send it to Google, and then load the Avro file into BigQuery using the BigQuery web UI in the GCP Console.

- C. Export the records from the database into a CSV file. Create a public URL for the CSV file, and then use Storage Transfer Service to move the file to Cloud Storage. Load the CSV file into BigQuery using the BigQuery web UI in the GCP Console.

- D. Export the records from the database as an Avro file. Create a public URL for the Avro file, and then use Storage Transfer Service to move the file to Cloud Storage. Load the Avro file into BigQuery using the BigQuery web UI in the GCP Console.

Correct answer: B
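
The "time-efficient" requirement depends on how long 10 TB takes to move over a network link. The sketch below estimates the ideal transfer time at a few uplink speeds. The speeds are hypothetical, since the question does not state the available bandwidth, and the estimate ignores protocol overhead, retries, and export time.

```python
DATABASE_BYTES = 10 * 10**12  # 10 TB from the question, taken as decimal terabytes

def transfer_days(size_bytes, bandwidth_bits_per_second):
    """Ideal transfer time in days, ignoring protocol overhead and retries."""
    seconds = size_bytes * 8 / bandwidth_bits_per_second
    return seconds / 86_400

# Hypothetical sustained uplink speeds; the question does not state one.
for label, bps in [("100 Mbps", 100e6), ("1 Gbps", 1e9), ("10 Gbps", 10e9)]:
    print(f"{label}: {transfer_days(DATABASE_BYTES, bps):.2f} days")
```

At a sustained 100 Mbps the upload takes a little over nine days, while at 1 Gbps it takes under one day, so the comparison between an online upload and a shipped appliance depends heavily on the link actually available.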

Question 5

You need to create a near real-time inventory dashboard that reads the main inventory tables in your BigQuery data warehouse. Historical inventory data is stored as inventory balances by item and location. You have several thousand updates to inventory every hour. You want to maximize performance of the dashboard and ensure that the data is accurate. What should you do?

- A. Leverage BigQuery UPDATE statements to update the inventory balances as they are changing.

- B. Partition the inventory balance table by item to reduce the amount of data scanned with each inventory update.

- C. Use BigQuery streaming to stream changes into a daily inventory movement table. Calculate balances in a view that joins it to the historical inventory balance table. Update the inventory balance table nightly.

- D. Use the BigQuery bulk loader to batch load inventory changes into a daily inventory movement table. Calculate balances in a view that joins it to the historical inventory balance table. Update the inventory balance table nightly.

Correct answer: C
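
The view described in answer C combines the last nightly balance with the current day's movements. The following pure-Python sketch emulates that calculation on in-memory data. The item names, locations, quantities, and the function name `current_balances` are hypothetical, and a real implementation would be a SQL view rather than Python.

```python
from collections import defaultdict

# Hypothetical rows: (item, location) -> balance as of the last nightly update.
historical_balances = {("widget", "NYC"): 100, ("widget", "SFO"): 40}

# Hypothetical streamed movements for the current day: (item, location, quantity change).
daily_movements = [
    ("widget", "NYC", -5),
    ("widget", "SFO", +10),
    ("gadget", "NYC", +7),   # item with no historical balance yet
    ("widget", "NYC", -2),
]

def current_balances(balances, movements):
    """Emulate the view: historical balance plus the sum of today's movements."""
    result = defaultdict(int, balances)  # copies the input, missing keys start at 0
    for item, location, delta in movements:
        result[(item, location)] += delta
    return dict(result)

view = current_balances(historical_balances, daily_movements)
print(view)
```

Because movements are only appended and the balance table is rewritten once per night, the dashboard reads an accurate figure without running frequent UPDATE statements against the balance table.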

Question 6

You have data stored in BigQuery. The data in the BigQuery dataset must be highly available. You need to define a storage, backup, and recovery strategy for this data that minimizes cost. How should you configure the BigQuery tables that have a recovery point objective (RPO) of 30 days?

- A. Set the BigQuery dataset to be regional. In the event of an emergency, use a point-in-time snapshot to recover the data.

- B. Set the BigQuery dataset to be regional. Create a scheduled query to make copies of the data to tables suffixed with the time of the backup. In the event of an emergency, use the backup copy of the table.

- C. Set the BigQuery dataset to be multi-regional. In the event of an emergency, use a point-in-time snapshot to recover the data.

- D. Set the BigQuery dataset to be multi-regional. Create a scheduled query to make copies of the data to tables suffixed with the time of the backup. In the event of an emergency, use the backup copy of the table.

Correct answer: C

Question 7

You used Dataprep to create a recipe on a sample of data in a BigQuery table. You want to reuse this recipe on a daily upload of data with the same schema, after the load job with variable execution time completes. What should you do?

- A. Create a cron schedule in Dataprep.

- B. Create an App Engine cron job to schedule the execution of the Dataprep job.

- C. Export the recipe as a Dataprep template, and create a job in Cloud Scheduler.

- D. Export the Dataprep job as a Dataflow template, and incorporate it into a Composer job.

Correct answer: D

Question 8

You want to automate execution of a multi-step data pipeline running on Google Cloud. The pipeline includes Dataproc and Dataflow jobs that have multiple dependencies on each other. You want to use managed services where possible, and the pipeline will run every day. Which tool should you use?

- A. cron

- B. Cloud Composer

- C. Cloud Scheduler

- D. Workflow Templates on Dataproc

Correct answer: B
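
Jobs with "multiple dependencies on each other" form a directed acyclic graph, and an orchestrator has to run them in an order that respects every dependency. The sketch below shows that ordering step using Python's standard-library `graphlib`. The job names are hypothetical, and this is not Cloud Composer or Airflow code.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each key runs only after all jobs in its set finish.
dependencies = {
    "dataproc_clean": {"ingest"},
    "dataflow_enrich": {"ingest"},
    "dataproc_aggregate": {"dataproc_clean", "dataflow_enrich"},
    "publish": {"dataproc_aggregate"},
}

# static_order() yields one valid execution order and raises CycleError on a cycle.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)
```

A plain time-based scheduler can start each job at a fixed time but does not track whether upstream jobs have finished, which is the gap a dependency-aware orchestrator fills.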

Question 9

You are managing a Cloud Dataproc cluster. You need to make a job run faster while minimizing costs, without losing work in progress on your clusters. What should you do?

- A. Increase the cluster size with more non-preemptible workers.

- B. Increase the cluster size with preemptible worker nodes, and configure them to forcefully decommission.

- C. Increase the cluster size with preemptible worker nodes, and use Cloud Stackdriver to trigger a script to preserve work.

- D. Increase the cluster size with preemptible worker nodes, and configure them to use graceful decommissioning.

Correct answer: D

Question 10

You work for a shipping company that uses handheld scanners to read shipping labels. Your company has strict data privacy standards that require scanners to only transmit tracking numbers when events are sent to Kafka topics. A recent software update caused the scanners to accidentally transmit recipients' personally identifiable information (PII) to analytics systems, which violates user privacy rules. You want to quickly build a scalable solution using cloud-native managed services to prevent exposure of PII to the analytics systems. What should you do?

- A. Create an authorized view in BigQuery to restrict access to tables with sensitive data.

- B. Install a third-party data validation tool on Compute Engine virtual machines to check the incoming data for sensitive information.

- C. Use Cloud Logging to analyze the data passed through the total pipeline to identify transactions that may contain sensitive information.

- D. Build a Cloud Function that reads the topics and makes a call to the Cloud Data Loss Prevention (Cloud DLP) API. Use the tagging and confidence levels to either pass or quarantine the data in a bucket for review.

Correct answer: D
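
The pass-or-quarantine decision in answer D can be sketched as a small routing function. The detector below is a regex stand-in written for illustration and is not the Cloud DLP API. The pattern set, the fixed score of 1.0, the `min_likelihood` threshold, and the sample events are all assumptions.

```python
import re

# Stand-in detector: NOT the Cloud DLP API. It flags text that looks like
# an email address or a US-style phone number.
PATTERNS = {
    "EMAIL_ADDRESS": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "PHONE_NUMBER": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def inspect(text):
    """Return (info_type, score) pairs; a real service would grade its confidence."""
    return [(name, 1.0) for name, pattern in PATTERNS.items() if pattern.search(text)]

def route(event, min_likelihood=0.5):
    """Return 'quarantine' if any finding meets the threshold, else 'pass'."""
    findings = inspect(event)
    flagged = any(score >= min_likelihood for _, score in findings)
    return "quarantine" if flagged else "pass"

# Hypothetical scanner events.
events = ["tracking=1Z999AA10123456784", "tracking=1Z999 name=Ann ann@example.com"]
decisions = [route(e) for e in events]
print(decisions)
```

In the architecture the answer describes, quarantined events would be written to a bucket for review and only passed events would continue to the analytics systems.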

Question 11

You have developed three data processing jobs. One executes a Cloud Dataflow pipeline that transforms data uploaded to Cloud Storage and writes results to BigQuery. The second ingests data from on-premises servers and uploads it to Cloud Storage. The third is a Cloud Dataflow pipeline that gets information from third-party data providers and uploads the information to Cloud Storage. You need to be able to schedule and monitor the execution of these three workflows and manually execute them when needed. What should you do?

- A. Create a Directed Acyclic Graph in Cloud Composer to schedule and monitor the jobs.

- B. Use Stackdriver Monitoring and set up an alert with a Webhook notification to trigger the jobs.

- C. Develop an App Engine application to schedule and request the status of the jobs using GCP API calls.

- D. Set up cron jobs in a Compute Engine instance to schedule and monitor the pipelines using GCP API calls.

Correct answer: A

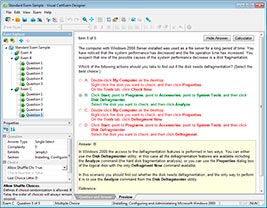

HOW TO OPEN VCE FILES

Use VCE Exam Simulator to open VCE files

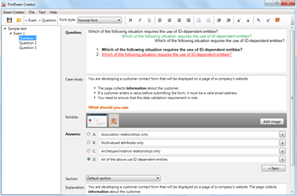

HOW TO OPEN VCEX AND EXAM FILES

Use ProfExam Simulator to open VCEX and EXAM files

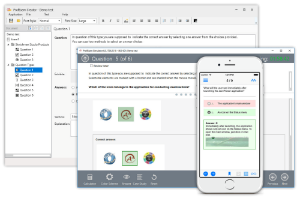

ProfExam at a 20% markdown

You have the opportunity to purchase ProfExam at a 20% reduced price

Get Now!